The power of Kubernetes on bare metal

Kubernetes, often abbreviated as K8s, is a powerful container orchestrator designed to handle the complexities of modern distributed workloads. It can run on virtual machines or directly on bare metal servers.

It provides a robust framework for managing containerized applications across a cluster of physical or virtual machines, leveraging the underlying power of bare metal servers to deliver scalable, resilient, and highly available applications.

Summary

This article will explore the core components of Kubernetes and how it harnesses the capabilities of bare metal to enhance performance, reliability, and resource management. If you already know you need it, sign up and enjoy what Latitude.sh has to offer.

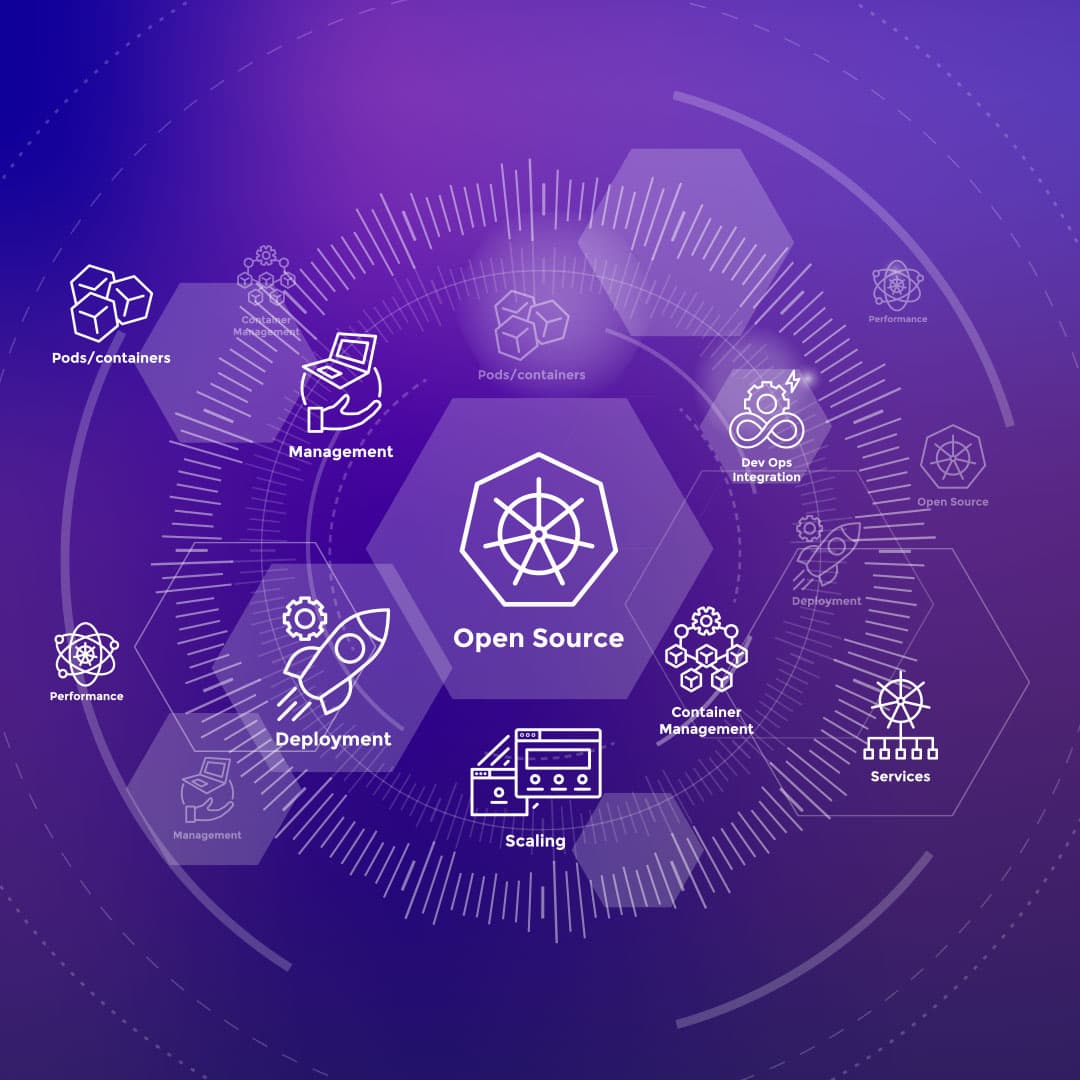

The Fundamentals of Kubernetes

Kubernetes is known for its extensive set of features that streamline the deployment and management of applications. It addresses key challenges such as service discovery, load balancing, self-healing, and automatic scaling.

By abstracting the underlying infrastructure and its inherent complexities, Kubernetes simplifies the process of managing applications at scale. Key components of Kubernetes include:

Service Discovery and Load Balancing: Kubernetes manages networking, ensuring that containers can communicate with each other regardless of where they are within the cluster. This feature is critical for maintaining high availability and reliability.

Automatic Bin Packing: Kubernetes uses each container's resource requests and limits to place applications on suitable nodes within the cluster, making efficient use of hardware resources like CPU, memory, and storage.

Storage Orchestration: Kubernetes provisions and attaches persistent storage for applications through the Container Storage Interface (CSI), which abstracts the differences between storage backends so that data outlives individual containers.

Self-Healing: Kubernetes automatically manages and repairs failed components within the cluster, ensuring that applications remain available. It does so by restarting failed containers, rescheduling pods on different nodes when a node fails, and replacing pods that fail their health checks.

Secret and Configuration Management: It provides secure management of sensitive information like passwords and configurations, ensuring they are only accessible to the right entities within the cluster.

Automated Rollouts and Rollbacks: Kubernetes automates the process of updating applications, rolling out new versions, and reverting changes if something goes wrong, reducing downtime and ensuring smooth deployments.

Batch Execution: Kubernetes can manage batch jobs efficiently, such as data processing tasks, ensuring that such workloads are handled without manual intervention.
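Several of the features above show up as fields in a single Deployment manifest. The following is a minimal sketch, not a production configuration: the app name, image, port, probe path, and resource figures are all hypothetical values chosen for illustration. It builds the manifest as a Python dictionary and prints it as JSON.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Return a Deployment manifest as a plain dict (all values are illustrative)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Automated rollouts: replace pods gradually during an update.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 1, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            # Bin packing: the scheduler places the pod based on
                            # its requests; limits cap what it may consume.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "256Mi"},
                                "limits": {"cpu": "500m", "memory": "512Mi"},
                            },
                            # Self-healing: the container is restarted when
                            # this probe keeps failing.
                            "livenessProbe": {
                                "httpGet": {"path": "/healthz", "port": 8080},
                                "initialDelaySeconds": 5,
                                "periodSeconds": 10,
                            },
                        }
                    ]
                },
            },
        },
    }


# Hypothetical app name and image, used only for this example.
manifest = build_deployment("web", "registry.example.com/web:1.0.0")
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output can be saved to a file and applied with `kubectl apply -f`.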

Kubernetes Architecture

Kubernetes operates on a control plane and worker node architecture, which is crucial for managing distributed workloads. The control plane, which includes the API server, controller manager, and scheduler, is responsible for managing the cluster and delegating tasks to the worker nodes.

The control plane manages how and where applications are scheduled, ensuring that the desired state of the cluster is maintained.
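The idea of maintaining a desired state can be illustrated with a toy reconciliation pass. This is a simplified sketch of the general control-loop pattern, not Kubernetes' actual controller code; the `reconcile` function and the pod naming scheme are hypothetical.

```python
def reconcile(desired_replicas: int, running_pods: list, name: str = "web") -> list:
    """Return the pod list after one reconciliation pass."""
    pods = list(running_pods)
    next_id = 0
    # Too few pods: create replacements until the count matches.
    while len(pods) < desired_replicas:
        candidate = f"{name}-{next_id}"
        next_id += 1
        if candidate not in pods:
            pods.append(candidate)
    # Too many pods: remove the surplus.
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


pods = reconcile(3, [])
print(pods)                 # ['web-0', 'web-1', 'web-2']
pods.remove("web-1")        # simulate a crashed pod
print(reconcile(3, pods))   # ['web-0', 'web-2', 'web-1']
```

The loop never needs to know why a pod disappeared. It only compares what should exist with what does exist and closes the gap.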

Control Plane: The control plane, whose nodes were previously called master nodes, manages the cluster’s state through several key components:

API Server (kube-apiserver): This is the primary interface for interacting with the Kubernetes cluster, providing the means to manage resources within the cluster.

Controller Manager (kube-controller-manager): It continuously monitors the state of the cluster and enforces the desired configuration, ensuring that resources like deployments and services remain healthy.

Scheduler (kube-scheduler): This component is responsible for deciding which node each new pod should run on, based on resource availability, scheduling constraints, and node affinity and anti-affinity rules.

etcd: A distributed key-value store that serves as Kubernetes' primary data store, maintaining cluster state and configuration.
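The scheduling decision can be pictured as a filter step followed by a scoring step. The sketch below is a deliberately simplified stand-in: the `Node` type, the `schedule` function, and the "tightest fit" scoring rule are assumptions made for illustration, and the real kube-scheduler uses a configurable set of plugins rather than this logic.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int          # free CPU in millicores
    free_mem_mi: int         # free memory in MiB
    labels: dict = field(default_factory=dict)


def schedule(nodes, cpu_m, mem_mi, node_selector=None) -> Optional[str]:
    """Pick a node name for a pod, or None if no node fits."""
    selector = node_selector or {}
    # Filter: keep nodes with enough free resources and matching labels.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= cpu_m
        and n.free_mem_mi >= mem_mi
        and all(n.labels.get(k) == v for k, v in selector.items())
    ]
    if not feasible:
        return None
    # Score: prefer the node left with the least free CPU (tighter packing).
    best = min(feasible, key=lambda n: (n.free_cpu_m - cpu_m, n.name))
    return best.name


nodes = [
    Node("node-a", 4000, 8192, {"disk": "nvme"}),
    Node("node-b", 1000, 2048, {"disk": "nvme"}),
    Node("node-c", 500, 1024, {"disk": "hdd"}),
]
print(schedule(nodes, 750, 1024))                   # node-b
print(schedule(nodes, 250, 512, {"disk": "hdd"}))   # node-c
print(schedule(nodes, 8000, 512))                   # None
```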

Worker: The worker nodes run the containers and handle the application workloads. Each worker node is equipped with three critical components:

Kubelet: It communicates with the control plane to receive tasks and report status.

Kube-proxy: Maintains network rules on each node so that traffic sent to a Kubernetes Service, whether it originates inside or outside the cluster, is routed to one of the pods backing it.

Container runtime: Manages the lifecycle of containers on the node, handling tasks like pulling images and starting, stopping, and managing containers. Popular runtimes like containerd interface directly with the host operating system to create and manage containers.

These components work together to ensure that the cluster is both highly available and resilient. The kubelet manages the pods assigned to its node, while the container runtime actually runs their containers. Meanwhile, kube-proxy manages network traffic for Services, making sure applications can communicate effectively.

Deployments and Containers

The essence of Kubernetes is its ability to manage deployments and containers efficiently. Kubernetes containers allow for a high level of isolation between applications, ensuring that a failure in one does not affect others.

Deployments within Kubernetes abstract these containers into a set of resources, allowing for features like self-healing and automated updates.

Pod: The basic unit in Kubernetes, a pod represents one container or a group of tightly coupled containers running on the cluster. Pods encapsulate the resources and configuration a running application needs.

Services: Kubernetes services abstract the networking layer, exposing a set of pods under a single network endpoint. This abstraction allows for easy scaling and load balancing across containers.

Higher-Level Abstractions: Beyond pods, Kubernetes offers additional abstractions such as ReplicaSets, Deployments, StatefulSets, DaemonSets, and Jobs. These higher-level constructs build on basic pod functionality to provide additional features like horizontal scaling, stateful deployments, and batch job management.
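A Service finds its pods through label selectors. As a rough sketch of that matching rule (the `select_endpoints` function, pod records, and IP addresses are made up for this example), a pod backs a Service when it is ready and its labels contain every key-value pair in the selector:

```python
def select_endpoints(selector: dict, pods: list) -> list:
    """Return IPs of ready pods whose labels contain every selector pair."""
    return [
        p["ip"]
        for p in pods
        if p["ready"]
        and all(p["labels"].get(k) == v for k, v in selector.items())
    ]


# Hypothetical pods; in a real cluster this data comes from the API server.
pods = [
    {"name": "web-0", "ip": "10.42.0.11", "ready": True,
     "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-1", "ip": "10.42.1.7", "ready": False,
     "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-0", "ip": "10.42.2.3", "ready": True,
     "labels": {"app": "db"}},
]
print(select_endpoints({"app": "web"}, pods))  # ['10.42.0.11']
```

Scaling a Deployment up simply adds more pods carrying the same labels, so the Service picks them up without any change to its own definition.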

How Kubernetes Leverages Bare Metal

The power of Kubernetes is fully realized when it is paired with bare metal servers. Bare metal provides the physical infrastructure needed to support the high demands of containerized applications. Kubernetes leverages this infrastructure to:

Optimize Resource Utilization: By managing containers across multiple physical servers, Kubernetes ensures efficient use of CPU, memory, and storage resources, preventing resource contention and bottlenecks.

Enhanced Performance: Direct access to physical hardware allows Kubernetes to achieve better performance, especially for I/O-intensive applications.

The combination of Kubernetes and bare metal reduces latency and increases throughput, which is crucial for demanding workloads such as AI, machine learning, and real-time data processing.

Reliability and Scalability: Bare metal provides a stable foundation for running Kubernetes clusters, making them more resilient to hardware failures.

Kubernetes can self-heal and dynamically reconfigure workloads across available nodes, ensuring high availability even in the event of hardware failures.

In conclusion, Kubernetes, when combined with bare metal, provides a powerful solution for managing containerized applications.

Its ability to abstract and manage resources efficiently makes it a go-to choice for organizations looking to deploy and scale their applications in a reliable and cost-effective manner.

Whether for microservices, batch jobs, or stateful applications, Kubernetes on bare metal delivers the necessary performance, scalability, and reliability to meet modern business demands.

Latitude.sh offers a variety of options for deploying and managing Kubernetes, providing the flexibility and control businesses need to scale their operations effectively.

Whether you're looking to leverage the convenience of managed Kubernetes services or prefer the control of running Kubernetes on bare metal, Latitude.sh has you covered.

Kubernetes on Bare Metal with Rancher: For those who want both control and performance, running Kubernetes on bare metal is an excellent choice.

Latitude.sh’s guide to setting up Kubernetes on bare metal with Rancher RKE2 combines the ease of managed Kubernetes with the performance and control of dedicated hardware. The setup is straightforward:

Requirements: You'll need at least 3 Latitude.sh servers for your cluster and one server for Rancher. Additionally, you'll need kubectl and Helm installed on your local machine for managing the cluster.

This setup allows you to maintain complete control over your infrastructure while benefiting from Rancher’s intuitive management capabilities. It's perfect for organizations that have matured to a point where managing their infrastructure is a priority.

Terraform Plan: For teams looking for a more hands-on approach, Latitude.sh offers a Terraform plan to deploy Kubernetes on bare metal.

Managed Kubernetes services like EKS, GKE, and AKS are popular because they simplify setup, but they come with vendor lock-in. Running Kubernetes on Latitude.sh with Terraform gives you the freedom to manage your own resources directly.

Requirements: At least 2 Latitude.sh servers and kubectl installed on your workstation. This setup enables you to use Terraform's infrastructure-as-code approach to define and manage your infrastructure resources programmatically.

Load Balancing with MetalLB and Cloudflare: Once you have your Kubernetes cluster set up on bare metal, distributing incoming traffic to your applications is crucial.

Latitude.sh offers a guide for setting up load balancing with MetalLB and Cloudflare. This setup ensures that no single server becomes overwhelmed with traffic, thereby improving the performance and reliability of your applications.

Requirements: You’ll need a Cloudflare account for DNS and load balancing, and a Kubernetes cluster deployed on Latitude.sh.

The guide walks you through the process of setting up a load balancer, which is critical for maintaining availability and scalability in your Kubernetes environment.
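To give a sense of what a MetalLB setup involves, here is a hedged sketch that generates the kind of resources MetalLB reads. It assumes MetalLB's Layer 2 mode, which may differ from the mode the guide uses; the pool name, address range (taken from a documentation-only IP block), and Service details are placeholders rather than values from the guide.

```python
import json


def metallb_layer2_config(pool_name, addresses, namespace="metallb-system"):
    """Return an IPAddressPool and the L2Advertisement that announces it."""
    pool = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "IPAddressPool",
        "metadata": {"name": pool_name, "namespace": namespace},
        "spec": {"addresses": addresses},
    }
    advertisement = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "L2Advertisement",
        "metadata": {"name": f"{pool_name}-l2", "namespace": namespace},
        "spec": {"ipAddressPools": [pool_name]},
    }
    return [pool, advertisement]


def loadbalancer_service(name, app_label, port=80, target_port=8080):
    """Return a Service of type LoadBalancer; MetalLB assigns its external IP."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Placeholder range; replace with addresses actually routed to your servers.
items = metallb_layer2_config("public-pool", ["203.0.113.10-203.0.113.20"])
items.append(loadbalancer_service("web", "web"))
bundle = {"apiVersion": "v1", "kind": "List", "items": items}
print(json.dumps(bundle, indent=2))
```

Once the Service receives an external IP from the pool, a DNS record in Cloudflare can point at that address.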

Setting Up Persistent Storage for Kubernetes with Longhorn: For applications that require reliable data storage, Latitude.sh provides a guide for setting up persistent storage with Longhorn.

Longhorn is a distributed block storage system that integrates seamlessly with Kubernetes, providing lightweight replication and persistence for your workloads.

Requirements: A Kubernetes cluster with at least 3 nodes, kubectl installed on your workstation, and Helm for installing Longhorn. This setup ensures that your applications can handle persistent data storage needs without relying solely on external storage solutions.
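Once Longhorn is installed, workloads request storage through an ordinary PersistentVolumeClaim. The sketch below assumes the StorageClass is named `longhorn`, which is worth confirming with `kubectl get storageclass` on your own cluster; the claim name and size are illustrative.

```python
import json


def longhorn_pvc(name, size="10Gi", storage_class="longhorn"):
    """Return a PersistentVolumeClaim manifest (storage class name is assumed)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical claim name for a database workload.
print(json.dumps(longhorn_pvc("db-data", size="20Gi"), indent=2))
```

A pod then references the claim by name in its `volumes` section, and the data persists even if the pod is rescheduled to another node.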

Building a Private Cloud on Bare Metal with Harvester: For those looking to replicate the benefits of cloud agility but within their own infrastructure, Latitude.sh offers a guide to building a private cloud on bare metal using Harvester.

Harvester is an open-source hyper-converged infrastructure (HCI) solution that simplifies managing virtual machines and Kubernetes clusters on your servers.

Requirements: At least 3 Latitude.sh servers, Harvester iPXE script for cluster setup, and a suitable IP address range. This setup provides the flexibility and control of a private cloud while avoiding the limitations often associated with public cloud providers.

Latitude.sh’s variety of deployment options, from managed Kubernetes services to running Kubernetes on bare metal, ensures that organizations can choose the right setup that aligns with their needs.

Whether you need the simplicity of a managed service or the control of bare metal, Latitude.sh provides the tools and guidance to help you succeed.

Other options besides bare metal

When considering how to deploy Kubernetes, there are a variety of options available, each catering to different organizational needs and priorities.

Understanding these alternatives is crucial for businesses to make informed decisions about the best method for their specific requirements.

Another common deployment method is running Kubernetes on virtual machines (VMs) instead of bare metal. This approach involves setting up the Kubernetes control plane and worker nodes on existing VMs within a cloud provider's infrastructure or in a local virtualized environment.

Deploying Kubernetes on VMs offers significant flexibility, allowing businesses to integrate their existing virtualized setups with Kubernetes seamlessly.

This can be particularly beneficial in hybrid cloud environments, where businesses wish to take advantage of their existing infrastructure investments.

It also provides a level of control over the underlying infrastructure that is not possible with managed services, as organizations have the flexibility to tweak settings and configurations to better suit their needs.

Despite these benefits, running Kubernetes on VMs introduces its own set of challenges. The operational overhead is higher compared to managed Kubernetes, as IT teams must handle not only the management of Kubernetes but also the maintenance of the VMs themselves.

This can be resource-intensive, especially for organizations without extensive Kubernetes expertise. Furthermore, VMs tend to be more resource-heavy compared to bare metal setups, which can diminish some of the performance gains associated with Kubernetes.

Virtualization adds an additional layer of complexity that may result in increased latency and resource contention, ultimately affecting the efficiency of workloads running on Kubernetes.

Deploying Kubernetes directly on public cloud instances is another option, where Kubernetes runs directly on the virtual machines within the public cloud providers' environments.

Businesses can take advantage of the public cloud’s pay-as-you-go pricing model, paying only for the resources they consume.

This makes Kubernetes on public cloud instances a cost-effective choice for many organizations, especially when rapid scaling is not a primary requirement.

However, similar to using VMs, this approach requires businesses to manage the operational tasks themselves, such as patching, upgrades, and scaling, which can introduce additional overhead.

The control and flexibility are greater compared to managed services, but managing Kubernetes alongside public cloud resources can still be challenging for organizations without dedicated Kubernetes expertise.

Despite these alternatives, deploying Kubernetes on bare metal offers a unique set of advantages that can be particularly compelling for organizations that require high performance, control, and cost efficiency.

Bare metal Kubernetes provides organizations with direct access to physical hardware, eliminating the need for virtualization overhead.

This direct access means there are no additional layers between the application and the hardware, resulting in lower latency and better overall performance.

The absence of a hypervisor layer allows Kubernetes to fully utilize the physical resources of the server, leading to more efficient and responsive workloads.

For performance-sensitive applications, such as those in gaming, financial trading, or machine learning, bare metal Kubernetes is often the superior choice.

Moreover, bare metal Kubernetes provides businesses with greater control over their infrastructure. IT teams have the ability to configure and manage the physical servers directly, without being tied to the operational policies and constraints of cloud providers.

This level of control is particularly valuable for businesses with complex or custom application requirements, and it comes with the added benefit of decreased vendor lock-in. Organizations are not constrained by the ecosystem of a single cloud provider, giving them the freedom to move workloads as needed without significant cost penalties.

For businesses with multi-cloud strategies or those wanting to avoid the complexity and costs of managed services, running Kubernetes on bare metal allows for a more flexible and customizable deployment.

Sign up with Latitude.sh

In conclusion, while managed Kubernetes services and deploying Kubernetes on VMs or public cloud instances provide valuable options, Kubernetes on bare metal offers distinct advantages for organizations looking for high performance, control, and cost efficiency.

Each deployment method has its strengths and weaknesses, and the best choice depends on the specific needs and resources of the organization.

Understanding these options and evaluating them in the context of business requirements will enable IT teams to make the best decision for their Kubernetes deployment.

To take full advantage of what bare metal and Kubernetes have to offer, create a free account now.