What is a cloud on-ramp and how do direct connections work

The public internet is not the only way to reach an application. Applications can also be accessed over private networks through direct connections, also known as cloud on-ramps.

In a data center, many servers sit close to one another, so they can be connected directly by cable when needed. This setup provides an exceptionally secure and fast connection between servers, ensuring low latency and privacy, two pillars of the digital world.

Summary

By the end of this article, you'll fully understand how a cloud on-ramp works, why it can benefit your business, and how easily you can activate one with Latitude.sh. Your ideal setup is just a few clicks away. Sign up on Latitude.sh.

What is a Direct Connection?

Whenever you use the internet, you’re essentially navigating through a series of cables and signals to reach the applications and websites stored elsewhere.

There’s no exclusivity; many users share the same paths, such as the famous submarine cables. This shared infrastructure is why minor loading delays are so common.

Two issues are standard on the public web: server overload caused by excessive requests, and high latency caused by the geographical distance between servers and end users.
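To put the distance effect in numbers, here is a rough sketch that estimates the minimum round-trip time imposed by distance alone. It assumes light travels through optical fiber at about 200,000 km/s (roughly two thirds of its speed in a vacuum). The function name and the sample distances are illustrative, and real routes add routing and queuing delay on top of this floor.

```python
# Lower bound on round-trip time caused by physical distance.
# Assumption: signal speed in optical fiber is about 200,000 km/s.
# Real paths are longer than a straight line and add processing delay.

FIBER_SPEED_KM_PER_S = 200_000  # approximate, assumed value


def min_round_trip_ms(distance_km: float) -> float:
    """Return the minimum round-trip time in milliseconds for a given distance."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    one_way_seconds = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_seconds * 1000


if __name__ == "__main__":
    # Illustrative distances, not measurements of any real route.
    examples = [("same metro area", 50), ("cross-continent", 4_000), ("transoceanic", 10_000)]
    for label, km in examples:
        print(f"{label:>16}: {min_round_trip_ms(km):6.1f} ms minimum RTT")
```

No amount of bandwidth removes this floor, which is why placing servers physically close to the cloud provider matters.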

Now, imagine if you could connect your server directly to a cloud provider, bypassing the internet to access what you need faster, with lower latency and greater security.

This is where a cloud on-ramp, also known as a direct connection, comes in. With cloud on-ramps, the servers you are using can be directly connected to a cloud provider, ensuring exclusive access, unhindered by other users or delays.

How does a direct connection work?

The internet, often thought of as a mythical entity, is essentially a vast network of submarine and terrestrial cables operated by network providers, which carry traffic between end users and servers.

All the data, websites, and applications on the internet are stored on physical devices known as servers. A server is essentially a powerful computer that users can access remotely. This may sound basic, but it’s essential for understanding how direct connections work.

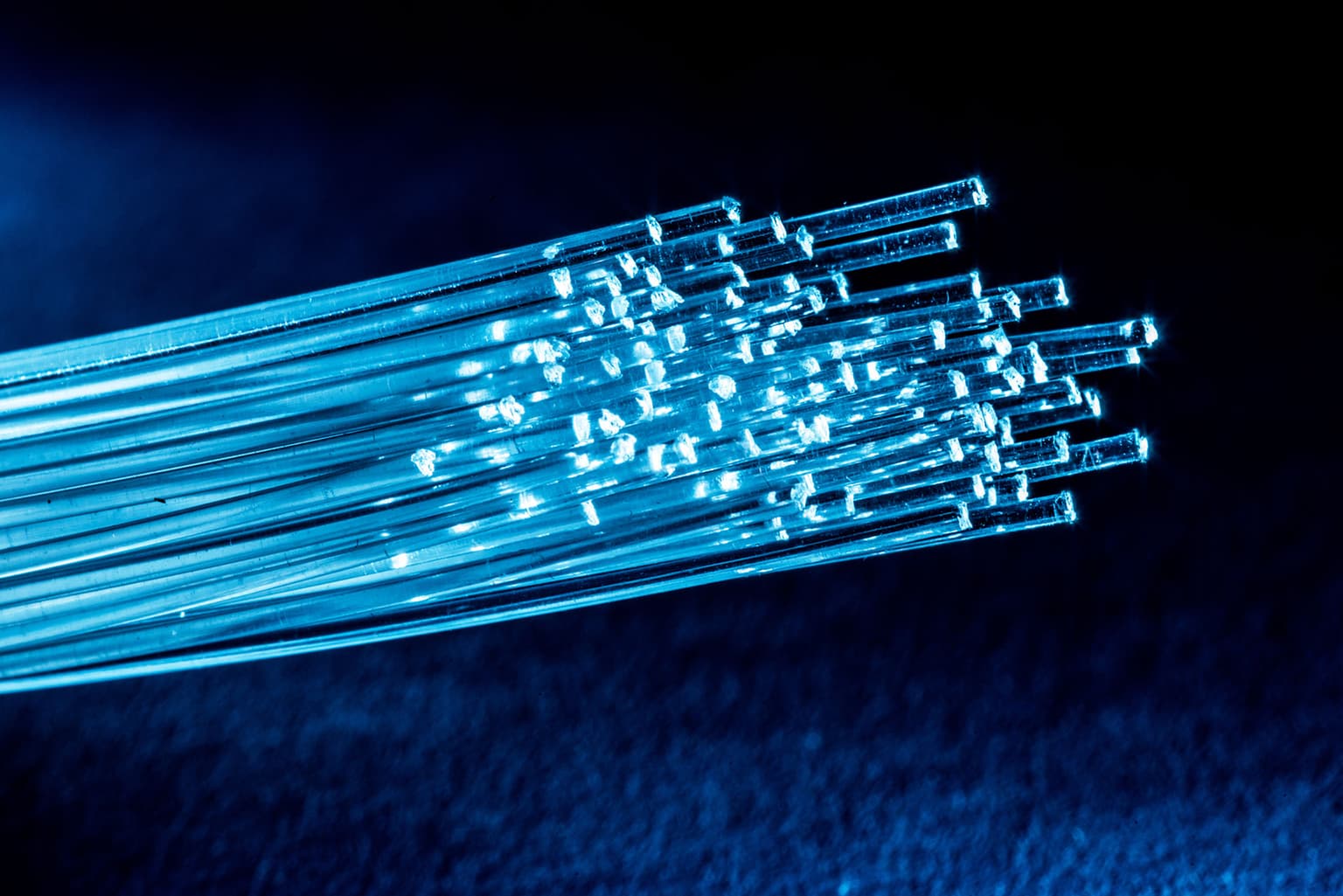

Like any computer, a server may have a CPU, a GPU, and multiple cables that connect it to different layers within the data center’s digital infrastructure.

If your application is hosted on a server located close to a cloud provider’s server, you can connect these machines directly through a private connection.

This private connection consists of actual cables linking one server to the other. Known as a direct connection, it is accessible only to those with permission to access both the application and the cloud provider.

There are no third parties involved, no other users competing for bandwidth—it’s exclusively between you and them. This is what makes the concept of a hybrid cloud possible.

Over time, this concept has evolved. Specialized network vendors have built their own private backbones, offering an alternative to traditional local on-ramps by connecting sites that are physically far apart.

A good example is Megaport, a leading provider of such network connections. It offers private connectivity between different data centers, and therefore direct access from one cloud provider to another, which extends the reach of a hybrid cloud setup.

What is a hybrid cloud?

A private cloud is built for a single tenant, which means the servers that run its applications are not shared with anyone else, as opposed to a public cloud.

In a hybrid cloud, applications and workloads are split between a private cloud and a public cloud. For data to move smoothly back and forth between the two, their servers need a fast, reliable connection. That is the role of cloud on-ramps.

A hybrid cloud platform brings your organization substantial benefits by combining the best of both private and public cloud environments.

It offers greater flexibility, allowing you to run workloads in the environment that best suits your needs—whether it's on-premises for sensitive data or in the cloud for scalable, resource-intensive tasks.

This flexibility enhances your control over data and processes, as you can choose where specific data and applications reside, ensuring they align with security and compliance requirements.
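As a minimal sketch of that choice, the rule below sends workloads with sensitive data to the private side and workloads that need elastic scale to the public side. `Workload`, `choose_environment`, and both flags are hypothetical names used for illustration only. A real placement policy would weigh many more factors, such as compliance scope, data gravity, and cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a workload, for illustration only."""
    name: str
    handles_sensitive_data: bool
    needs_elastic_scale: bool


def choose_environment(workload: Workload) -> str:
    """Return 'private', 'public', or 'either' for a workload."""
    # Sensitive data takes priority: keep it on infrastructure you control.
    if workload.handles_sensitive_data:
        return "private"
    # Scalable, resource-intensive tasks benefit from public cloud capacity.
    if workload.needs_elastic_scale:
        return "public"
    # Nothing forces the choice, so either side works.
    return "either"


if __name__ == "__main__":
    for w in [
        Workload("billing-db", handles_sensitive_data=True, needs_elastic_scale=False),
        Workload("batch-render", handles_sensitive_data=False, needs_elastic_scale=True),
        Workload("internal-wiki", handles_sensitive_data=False, needs_elastic_scale=False),
    ]:
        print(f"{w.name}: {choose_environment(w)}")
```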

A hybrid cloud also enables scalability, allowing your infrastructure to scale up and down according to demand without the need for significant capital investment in on-premises hardware.

The global reach of a hybrid cloud allows you to deploy resources closer to end-users, improving performance and reducing latency.

Integrated cross-platform security and unified compliance measures are built into the platform, helping you meet regulatory standards while protecting sensitive data across environments.

Finally, by leveraging both on-premises and cloud resources, hybrid cloud platforms drive cost efficiencies and improve operational workflows.

What is multi-cloud?

This is where it might become a little confusing. A hybrid cloud combines a private cloud and a public cloud that handle your workloads together, complementing each other.

In a multi-cloud setup, you also use more than one cloud provider, but the providers usually handle separate workloads and do not interact with each other.

In a hybrid cloud, you might have the same data moving between environments, whereas with a multi-cloud solution, you have different providers managing separate applications based on each provider's strengths.

For instance, one provider might host applications that require advanced machine learning tools, while another could handle a company’s email infrastructure.

This setup increases flexibility and reduces dependency on a single provider, helping to avoid vendor lock-in and optimize costs.

How do you get started with direct connections?

Latitude.sh, as a leading bare metal provider, offers a powerful solution for privately connecting your servers to major cloud providers through Cloud Gateway, a collaboration with Megaport.

With Cloud Gateway, you can securely connect to services like AWS, Google Cloud, and Microsoft Azure without exposing your resources to the public internet, ensuring a private and dedicated connection to your cloud infrastructure.

By moving your servers to Latitude.sh, you can also achieve an average cost reduction of 60% on compute and bandwidth expenses when compared to AWS, allowing you to maximize your budget without compromising on security or performance.

Latitude.sh Cloud Gateway serves as a scalable on-ramp to cloud providers, integrating smoothly with AWS, Azure, GCP, and various other partners.

The infrastructure is designed to maintain fast and consistent performance, ensuring that your applications can run with the lowest possible latency, as it connects your servers in as few hops as possible.
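One rough way to see the effect of a shorter path is to time TCP handshakes to the same service over each route, since a handshake takes about one round trip. The sketch below uses only the Python standard library. The function names are illustrative, and it measures connection setup time only, not application throughput.

```python
import socket
import statistics
import time


def tcp_connect_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time one TCP handshake to host:port and return it in milliseconds."""
    start = time.perf_counter()
    # create_connection returns once the handshake completes (about one round trip).
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000


def median_connect_ms(host: str, port: int, samples: int = 5) -> float:
    """Return the median of several handshake timings to smooth out jitter."""
    if samples < 1:
        raise ValueError("samples must be at least 1")
    timings = [tcp_connect_ms(host, port) for _ in range(samples)]
    return statistics.median(timings)
```

For example, calling `median_connect_ms("203.0.113.10", 443)` for a service's public address and `median_connect_ms("10.0.0.10", 443)` for its private address gives two numbers to compare. Both addresses are placeholders to replace with your own endpoints.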

Additionally, Latitude.sh provides the flexibility to connect programmatically through APIs, allowing your Cloud VPC to seamlessly interact with Latitude.sh resources and automate workflows across your infrastructure.

Latitude.sh’s Cloud Gateway makes it possible for businesses to harness the power and performance of bare metal with private, secure cloud connectivity—optimizing both costs and speed to meet even the most demanding infrastructure needs.

You can create a free account right now and see for yourself.